Problem Statement

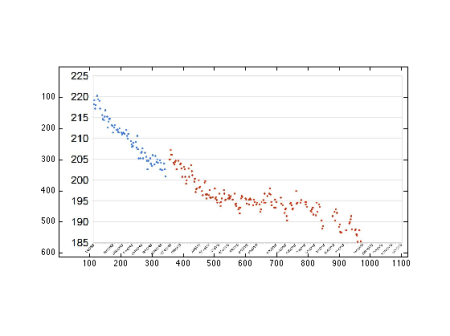

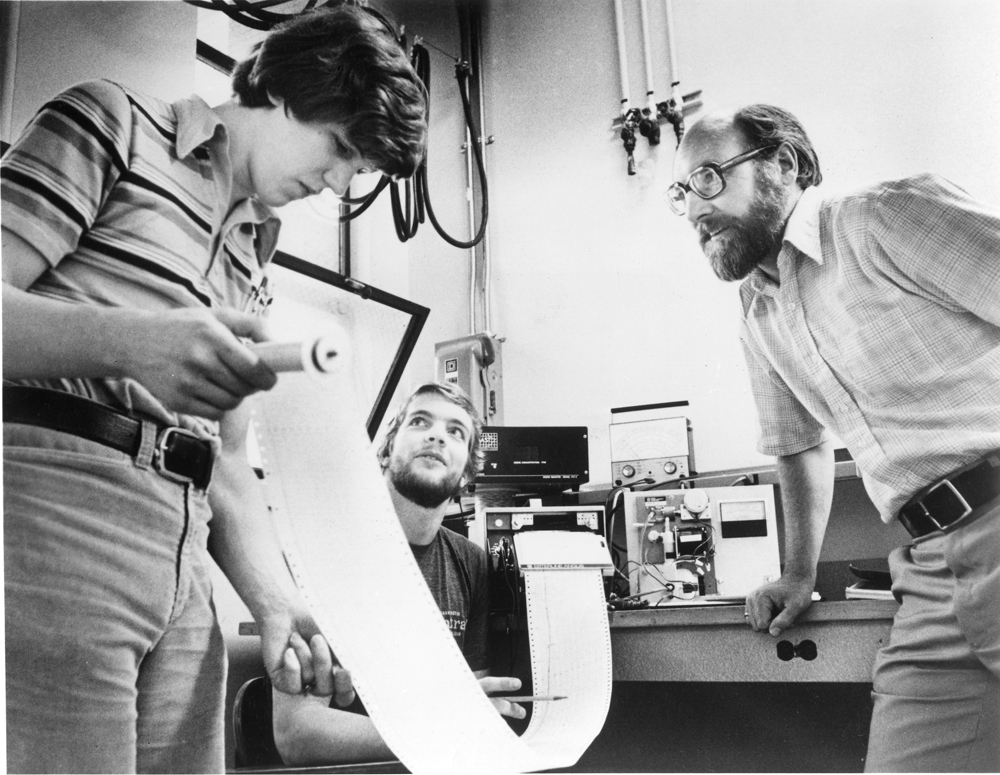

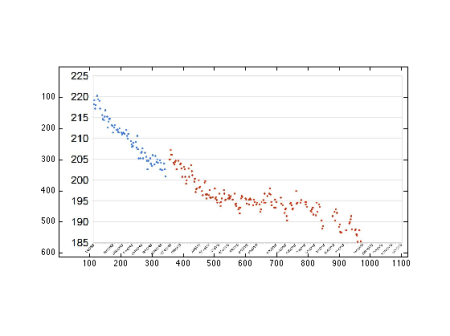

Between September 2009 and September 2010, I took part in an incentivised diet and exercise plan. My college roommate and I had both gained weight since college, so every two weeks we bet each other $20 that the slope of our own weight loss trajectory would be more negative than the other’s. We came out even, and each lost 30 lbs.

Since then, I’ve kept the weight off, but not without the same kind of tracking that helped me get there. I now use the Withings Smart Body Analyzer to upload my weight readings to the cloud and track my trends.

This didn’t exist for me in 2009, though, and I was taking down all my weigh-ins manually. I lost some of this data, and recently found their only relic: a JPG with the data plotted with appropriate axes.

How could I programmatically extract the data from the picture, to recreate the raw data they represented, so I could upload it to my new, snazzy Withings profile?

Just like last time we surprisingly (or not?) get to use dmperm!

Load the file

I made the picture public back then to gloat about my progress.

I = imread('https://dl.dropboxusercontent.com/u/5355654/weightOverTheLastYear.jpg');

image(I); axis equal tight

image(I); axis equal tight, xlim([80,180]); ylim([80,180]);

Prepare the image

We can split out the different color channels and create a sz variable for later …

R = I(:,:,1); G = I(:,:,2); B = I(:,:,3); sz = size(R);

… as well as crop out the axes …

R(571:end,:) = 255; R(:,1:100) = 255;

G(571:end,:) = 255; G(:,1:100) = 255;

B(571:end,:) = 255; B(:,1:100) = 255;

… and check out a kind of grayscale version of the image:

D = sqrt(double(R).^2 + double(G).^2 + double(B).^2);

imagesc(D); axis equal tight; colorbar; colormap bone;

imagesc(D); axis equal tight, xlim([80,180]); ylim([80,180])

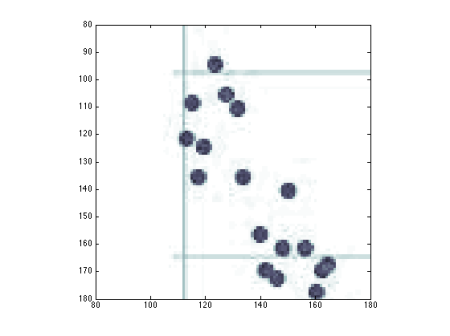

Detect the blobs

You can do blob detection on your own in MATLAB in a cinch / pinch. We first make a Gaussian kernel and convolve it with our image, which helps localize information packets that are about the size of our kernel:

k = @(x,y,t) 1 / (2*pi*t^2) * exp(- (x.^2+y.^2) / (2*t^2) );

[x,y] = meshgrid(-8:8,-8:8);

L = conv2(D,k(x,y,1),'same');

imagesc(L); axis equal tight; colorbar

imagesc(L); axis equal tight, xlim([80,180]); ylim([80,180]);
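If you want to convince yourself the smoothing step behaves as described, here is a minimal sketch on a made-up image. The `toy` array and its 5x5 dark marker are assumptions for illustration only, not part of the weight plot. It checks that the sampled kernel sums to roughly 1, and that a dark square on a white background leaves the smoothed minimum at the square's centre.

```matlab
% Same kernel as in the post; t is the standard deviation in pixels.
k = @(x,y,t) 1 / (2*pi*t^2) * exp(-(x.^2 + y.^2) / (2*t^2));
[x,y] = meshgrid(-8:8, -8:8);
K = k(x,y,1);

% The sampled kernel should sum to roughly 1, so smoothing keeps the
% overall intensity level away from the image borders.
kernelSum = sum(K(:));

% Hypothetical test image: white background with one dark 5x5 marker
% centred on row 21, column 21.
toy = 255 * ones(41,41);
toy(19:23,19:23) = 0;
smoothed = conv2(toy, K, 'same');

% Locate the darkest pixel of the smoothed image.
[~, where] = min(smoothed(:));
[rowMin, colMin] = ind2sub(size(toy), where);
fprintf('kernel sum = %.6f, minimum at (%d,%d)\n', kernelSum, rowMin, colMin);
```

Note that `conv2(...,'same')` pads with zeros, so values near the image border are pulled down as well. That is harmless in this sketch because the marker sits well inside the image.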

Then we take the Laplacian, the sum of the second derivatives in both dimensions, which helps us find the edges of these blobs. This assigns the appropriate sign to the data that is close in shape to our kernel. We do some mild trickery to keep the image the same size as the original:

zh = zeros(1,sz(2));

zv = zeros(sz(1),1);

L2 = [zh ; diff(L,2,1) ; zh] + [zv diff(L,2,2) zv];

imagesc(L2); axis equal tight; colorbar

imagesc(L2); axis equal tight, xlim([80,180]); ylim([80,180]);
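Written out, the padded second differences above amount to the usual five-point stencil. This is just a restatement of what `diff(L,2,1)` and `diff(L,2,2)` compute, with $i$ as the row index and $j$ as the column index:

$$
L_2(i,j) = \big[L(i+1,j) - 2L(i,j) + L(i-1,j)\big] + \big[L(i,j+1) - 2L(i,j) + L(i,j-1)\big]
$$

The zero padding (`zh` and `zv`) means the first bracket is replaced by 0 on the first and last rows, and the second bracket is replaced by 0 on the first and last columns. A dark blob on a light background is a local minimum of $L$, so $L_2$ is positive there, which is why a positive threshold picks out the markers.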

Adjacency matrices?

So we have “detected” our blobs, but we still need to find out where they are in the image. We do this by thresholding our Laplacian data to find the index locations in our matrix of every pixel that matters to us. We can then build an adjacency matrix. All of the pixels we care about are “connected” to themselves in this matrix. We also take a look at the pixels above, below, to the left, and to the right of each pixel of interest to see whether they pass the threshold too. We make the matrix, A, sparse because it is HUGE and we know that many of its entries will be zero. Why store almost half a trillion zeros?!

T = L2 > 35;

spsz = [numel(T),numel(T)];

A = logical(sparse(spsz(1),spsz(2)));

idx = find(T);

[r,c] = ind2sub(sz,idx);

A(sub2ind(spsz,idx,idx)) = 1;

A(sub2ind(spsz,idx,sub2ind(sz,r+1,c))) = T(sub2ind(sz,r+1,c));

A(sub2ind(spsz,idx,sub2ind(sz,r-1,c))) = T(sub2ind(sz,r-1,c));

A(sub2ind(spsz,idx,sub2ind(sz,r,c+1))) = T(sub2ind(sz,r,c+1));

A(sub2ind(spsz,idx,sub2ind(sz,r,c-1))) = T(sub2ind(sz,r,c-1));
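The same idea is easier to see on a tiny mask. The sketch below is not the code from the post: it uses a hypothetical 4x5 mask with two blobs, collects (row, column) pairs, and builds the matrix in one call to `sparse`. It also adds a bounds check that the full-size version gets away without, presumably because the thresholded pixels never touch the image border.

```matlab
% Hypothetical 4x5 mask with two blobs (not data from the post).
T = logical([0 0 0 0 0;
             0 1 1 0 0;
             0 0 0 0 0;
             0 0 0 1 0]);
sz = size(T);
idx = find(T);
[r,c] = ind2sub(sz, idx);

% Start with the self-connections, then add each of the four neighbours.
rowsA = idx;
colsA = idx;
offsets = [1 0; -1 0; 0 1; 0 -1];
for m = 1:size(offsets,1)
    rn = r + offsets(m,1);
    cn = c + offsets(m,2);
    % Drop neighbours that would fall outside the image.
    inside = rn >= 1 & rn <= sz(1) & cn >= 1 & cn <= sz(2);
    nidx = sub2ind(sz, rn(inside), cn(inside));
    % Keep only neighbours that also pass the threshold.
    keep = T(nidx);
    src = idx(inside);
    rowsA = [rowsA; src(keep)];
    colsA = [colsA; nidx(keep)];
end

% One logical sparse matrix with an entry per connection.
A = sparse(rowsA, colsA, true, numel(T), numel(T));
```

Here pixels 6 and 10 (in linear indexing) are horizontal neighbours, so `A(6,10)` and `A(10,6)` are set, while pixel 16 is only connected to itself.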

Learn about the blobs

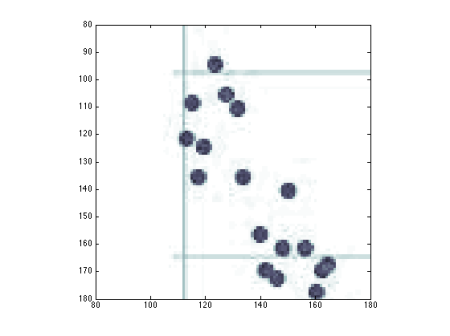

DMPERM to the rescue: we’ve made an adjacency matrix where the connected components are the blobs we care about! When we run it, we can look at each connected component, find the pixels that belong to it, and average their locations. You can see each connected component and the “location” we’ve assigned each one. It’s not perfect, but it’s really close:

C = zeros(size(T));

[p,q,r,s] = dmperm(A);

n = numel(r)-1;

px = nan(n-2,1);

py = nan(n-2,1);

for G=2:n-1

idx = p(r(G):r(G+1)-1);

[rows,cols] = ind2sub(sz,idx);

py(G-1) = mean(rows);

px(G-1) = mean(cols);

C(idx) = .25;

end

C(sub2ind(sz,round(py),round(px))) = 1;

imagesc(C); axis equal tight; colorbar

imagesc(C); axis equal tight, xlim([80,180]); ylim([80,180]);
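To see what `dmperm` hands back, here is a minimal sketch on a hypothetical five-node graph (nodes 1 and 2 linked, node 3 alone, nodes 4 and 5 linked). Every node is connected to itself, so the matrix has a zero-free diagonal and each diagonal block of the permuted matrix is one connected component. In the full-size matrix above, most rows and columns are empty, which is presumably why the loop skips the first and last blocks; the toy matrix has no empty rows, so all blocks are used.

```matlab
% Hypothetical graph: nodes 1-2 are linked, node 3 is isolated, nodes 4-5 are linked.
n = 5;
A = speye(n) + sparse([1 2 4 5], [2 1 5 4], 1, n, n);

% r marks where each diagonal block starts in the row permutation p.
[p,q,r,s] = dmperm(A);
nBlocks = numel(r) - 1;

% Give every node the number of the block it landed in.
labels = zeros(n,1);
for b = 1:nBlocks
    members = p(r(b):r(b+1)-1);
    labels(members) = b;
end
disp(labels')
```

The order of the blocks is up to `dmperm`, so the label numbers themselves carry no meaning; what matters is which nodes share a label.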

Extract the real data

With all of that work done, we can prescribe how the pixel locations relate to weights and dates, and actually collect the real data from the image:

weights = interp1(30.5:67:566.5,225:-5:185,py);

dates = interp1([112,1097],[datenum('9-8-2009'),datenum('12-1-2010')],px);

dateStrs = datestr(dates,'yyyy-mm-dd HH:MM:SS');

f = fopen('weight.csv','w');

fprintf(f,'Date, Weight\n');

for D = 1:numel(weights)

fprintf(f,'%s, %f\n',dateStrs(D,:),weights(D));

end

fclose(f);

plot(dates,weights,'.','MarkerSize',10), datetick
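The calibration is just linear interpolation between known gridline positions. Here is a small sketch using the gridline rows and weights from the code above. The three `py` values are made up for illustration, and the `datenum(2009,9,8)` form assumes that '9-8-2009' means 8 September 2009.

```matlab
% Gridline pixel rows and the weights they mark, copied from the post.
tickRows = 30.5:67:566.5;
tickWeights = 225:-5:185;

% Hypothetical blob centres (pixel rows), not values from the real image:
% one on a gridline, one halfway between two gridlines, one below the axis.
py = [97.5; 64; 600];
weights = interp1(tickRows, tickWeights, py);

% The same mapping written as a straight line: 5 lbs per 67 pixels, downward.
weightsLine = 225 - 5 * (py - 30.5) / 67;

% Dates: assuming '9-8-2009' in the post means 8 September 2009.
d0 = datenum(2009, 9, 8);
d1 = datenum(2010, 12, 1);
firstDay = interp1([112, 1097], [d0, d1], 112);
```

By default `interp1` does not extrapolate, so a row outside the gridlines (the 600 here) comes back as NaN, whereas the straight-line formula would happily return a number. That makes stray blobs outside the plot area easy to spot before they reach the CSV.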

All in all, this is a pretty gross way to get at the underlying data, but it was fun to try and to get it working. What have you used DMPERM for recently? What have you used MATLAB or Image Processing for recently?